A New Model of Causation

Between stimulus and response, there is a space. In that space lies our freedom and our power to choose our response.

- Stephen Covey, from “First Things First”

by Perry Marshall

The Greek philosopher Aristotle (1) articulated four levels of causation: Material, Formal, Efficient, and Final. While useful and venerated, his categories are fuzzy and have been hotly debated for millennia (2). This has led to endless controversy and confusion. The biggest argument is over Aristotle’s notion of teleology, or “final causes,” especially in scientific circles (3). I offer a new model of causation based on the tools of the modern world, including science and technology.

Final Causes?

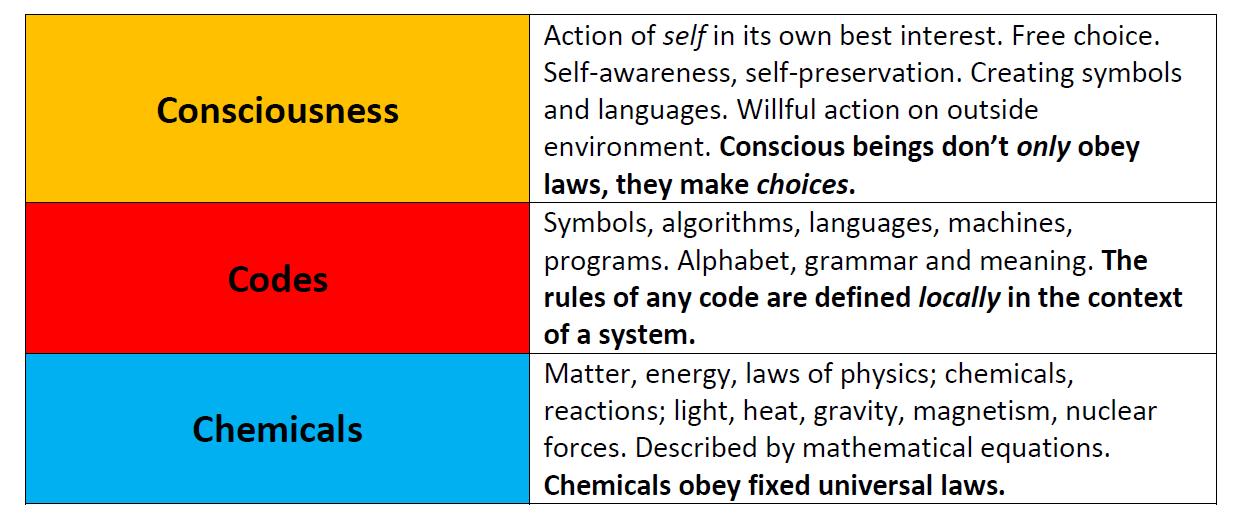

Materialists and reductionists insist that teleology, or purpose in nature – Aristotle’s final cause – is an illusion. Biologist Ernst Mayr said purpose is an artificial construct that we impose on the material world (3). Many say purpose doesn’t actually exist; everything just bubbles up from material causes.

The theory and practice of modern technology offer clarity on this point. Information hierarchies in digital communication, which are as empirical as anything in science, provide a framework for solving this problem.

New Model: Causation can be neatly and cleanly divided into three levels, with sharp boundaries between all three: Chemicals, Codes, and Consciousness.

The virtue of this model is that it delineates three non-overlapping levels of causation.

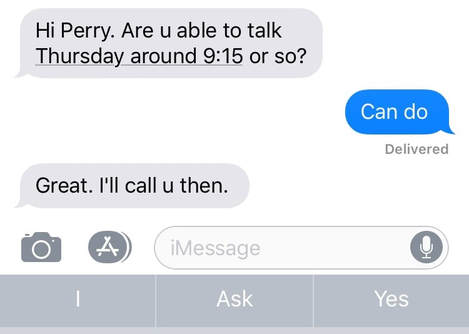

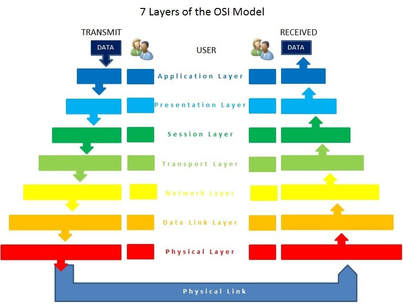

Chemicals: Law-Like Behavior

Matter always obeys the laws of physics and chemistry. Physical laws are the same everywhere and at all times, so far as we know. Matter obeys equations. Hence the precision of physics, chemistry, and engineering.

We can also define entire classes of emergent behavior. Weather, geology, snowflakes, solar systems; stalagmites, caves, beaches, planets, and galaxies – they all follow law-like interactions of matter and energy. The more precisely you know initial conditions, the better you can predict what the system will do, although for chaotic dynamical systems this can only be done statistically, due to the butterfly effect.

In principle, even the most complex system becomes simple once you know the laws. If you know Maxwell’s equations, the periodic table, and a handful of physics formulas, you can model everything that occurs on this level.

Codes: Local System Rules

You can readily distinguish between universal physical laws and the local laws of codes because codes are symbols. Symbols are freely chosen. The meaning of any symbol can only be defined within the system. The meaning of a string of 1s and 0s like “00100101” is defined entirely by context.

Information is measured in bits, not grams or kilometers or watts (4). This is why codes do not belong in the Chemicals category. Information is neither matter nor energy, as Norbert Wiener famously said (5). A bit, “1” or “0,” is a representation or record of a choice. A 64-Gig USB stick holds 549,755,813,888 bits, which are 549,755,813,888 binary choices, or degrees of freedom.

When we move from Chemicals to Codes, we encounter an entirely different class of complex system (6). The modern term for this is “algorithm.” (Aristotle might have struggled to relate, since he owned neither iPhones nor Androids.) Our world is run by algorithms: Google and Facebook and GPS; microwave ovens and automatic software updates; automated traffic signals and cruise controls.
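The point that “00100101” means nothing outside a system can be made concrete with a short sketch. The three conventions below (unsigned integer, ASCII, binary-coded decimal) are chosen for illustration and are not the only possible readings; the function names are hypothetical. The last function checks the USB stick arithmetic, assuming “64-Gig” means 64 GiB, that is, 64 × 2^30 bytes.

```python
# The same eight bits read under three different conventions.
BITS = "00100101"


def as_unsigned_int(bits: str) -> int:
    """Read the bits as a base-2 unsigned integer."""
    return int(bits, 2)


def as_ascii(bits: str) -> str:
    """Read the bits as the code of a single ASCII character."""
    return chr(int(bits, 2))


def as_bcd(bits: str) -> int:
    """Read the bits as two binary-coded-decimal digits, 4 bits each."""
    high, low = int(bits[:4], 2), int(bits[4:], 2)
    return high * 10 + low


def bits_in_gibibytes(gib: int) -> int:
    """Bits in `gib` gibibytes (assumes 1 GiB = 2**30 bytes, 8 bits per byte)."""
    return gib * 2**30 * 8


if __name__ == "__main__":
    print(as_unsigned_int(BITS))   # 37
    print(as_ascii(BITS))          # %
    print(as_bcd(BITS))            # 25
    print(bits_in_gibibytes(64))   # 549755813888
```

Nothing in the bit pattern itself selects one reading over another; the choice of decoder is the “local rule” of the system.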
Algorithms use languages defined in advance and run on specific hardware. You can’t run Microsoft Word on a pocket calculator. You can’t run Mac OS in your microwave oven.

Codes and algorithms are hierarchical. Engineering has a well-known model called the OSI (Open Systems Interconnection) 7-Layer Model. Your computer cable has a physical layer – the copper wire. This wire, layer one, carries a voltage (5 volts = “1,” and 0 volts = “0”), which forms layer two. Layer three is bytes of information that symbolize characters like “ABC” and “123.” And so it goes on up the chain to layer seven – as in the Microsoft Word application, for example (7).

Each layer is controlled by the layer above. Each is built on top of the layers below. All information systems, languages, and programs work this way. In human languages, the alphabet is the lowest layer. Spelling and words are next; rules of grammar are above those; the intended meaning is the highest layer (8).

We’re all familiar with the limitations of computers. Programs crash. Computers don’t program themselves. My computer is dumb as a box of rocks. We talk to Alexa and Siri, but nobody is “home.” Machine learning acquires knowledge but only learns what it’s programmed to learn. Machines that pass the Turing test or exhibit “general intelligence” have eluded us. Luciano Floridi, professor at Oxford’s Alan Turing Institute, says true AI doesn’t exist at all (9). This is because none of our machines possess level three.

The folks at Apple are trying as hard as they can to get Siri to pass the Turing Test. Currently, Siri cannot even convince a six-year-old that she is a real person.

Genetic Algorithms are machine learning programs that use a process resembling Darwinian evolution to write software. They require the user to define a fitness function, and they often need human intervention to achieve their goals.
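A minimal sketch of a genetic algorithm shows where the user-defined fitness function enters. Everything here is an assumption made for illustration: the target string, the mutation rate, the population size, and the function names are hypothetical, and real GAs evolve programs or parameters rather than a four-letter word.

```python
import random
import string

TARGET = "CODE"                     # hypothetical goal, supplied by a human
ALPHABET = string.ascii_uppercase


def fitness(candidate: str) -> int:
    """User-defined fitness: number of positions that match TARGET."""
    return sum(a == b for a, b in zip(candidate, TARGET))


def mutate(candidate: str, rng: random.Random, rate: float = 0.2) -> str:
    """Replace each character with a random letter with probability `rate`."""
    return "".join(
        rng.choice(ALPHABET) if rng.random() < rate else ch
        for ch in candidate
    )


def crossover(a: str, b: str, rng: random.Random) -> str:
    """Single-point crossover: prefix of `a` joined to suffix of `b`."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def evolve(generations: int = 200, pop_size: int = 30, seed: int = 0):
    """Return the best candidate found and the best fitness per generation."""
    rng = random.Random(seed)
    population = [
        "".join(rng.choice(ALPHABET) for _ in TARGET) for _ in range(pop_size)
    ]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        if population[0] == TARGET:
            break
        parents = population[: pop_size // 2]      # selection: keep the fitter half
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - 1)
        ]
        population = [population[0]] + children    # elitism: best survives unchanged
    return max(population, key=fitness), history


if __name__ == "__main__":
    best, history = evolve()
    print(best, fitness(best), len(history))
```

The search loop never decides what counts as “better.” That judgment lives entirely in `fitness` and `TARGET`, both written by the person running the program.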
The combinatorial space is so vast that either 1) they get stuck, or 2) they consume infinite resources searching for solutions. Thus it often takes more resources to babysit a GA than to hire a coder or solve the problem some other way. Such problems are well known in machine learning (10).

The ingredients GAs lack that humans have are consciousness and volition. If GAs had these attributes, they could set goals and then make decisions based on those goals. Because they cannot set their own goals, humans set goals for them. The effort of establishing such goals often proves far more complex than originally envisioned.

Consciousness: Volitional Behavior

Definition of consciousness: “In practice, if a subject repeatedly behaves in a purposeful, nonroutine manner that involves the brief retention of information, she, he or it is assumed to be conscious” (11).

Consciousness emphasizes the contextual way in which humans uniquely respond to unique situations. Programs are routines. Computers are by definition not capable of behaving in a nonroutine manner. A computer always does only what it is programmed to do.

Human beings make choices. We create languages, write programs, build machines. The simple act of making an acronym like “MTC” (“Marshall’s Theory of Causation”) creates a symbol and assigns meaning to it. The fact that humans freely assign meaning to words and symbols is the most basic evidence of our ability to choose. This is precisely what computer programs lack.

Two Directions of Causality

The three-tier model of Chemicals – Code – Consciousness defines two directions of causality: bottom-up and top-down. In rocks, crystals, sand dunes, tornadoes, hurricanes, meteors, stars, and planets, causation is bottom-up. In communication, with messages, encoding, decoding, instructions, and meaning, causation is top-down. There are currently no known exceptions (12). The $5 million Evolution 2.0 Prize is a search for an exception (13).
Similarly, we can make a clear distinction between computers, codes, and algorithms vs. the willful activity of humans. Computers, codes, and algorithms only learn with prior direction from humans. Toddlers learn 24/7/365. A toddler is far smarter than any computer.

We can conceptualize conscious free will as a Volitional Turing Machine, where instead of writing a 1 or 0 based on a deterministic program, the conscious agent decides whether to write a 1 or 0. This is how we construct sentences, assign meanings, and build machines, thus creating higher levels of order.

This is handily illustrated by autosuggest on a smartphone. Here you see a text from my friend Nathan:

Autosuggest offers me three choices: I, Ask, and Yes, based on statistics of prior messages and the English language.

Autosuggest demonstrates the difference between an algorithm and a human. If I’m only permitted the choices it offers (I’m a volitional Turing machine with three options), I can construct a sensible message without ever typing anything. I did a quick experiment and managed to generate this sentence just taking choices it gave me:

I don’t think you should get the mail today and you can pick up some stuff at my house and then go get some lunch.

If I always pick the first choice autosuggest offers me, I end up with the following:

I have a few minutes to do this stuff and then I’ll send you a text message and ask him about it and then I can tell you about it and then I can tell you about it and then I can tell you about it…

If I always pick the second choice autosuggest gives me, I get this:

Ask me about how much I can get it for you today I can tell you about how about it I can tell you about how about it I can tell you about how about it…

You notice the algorithm quickly collapses into a loop of repetitive gibberish. This is common to all algorithms. It makes passing the Turing test extremely difficult. This is because language comes from top-down causation (based on goals of conscious beings), but statistical methods of generating words and sentences are bottom-up. Computers cannot process semantic information (14).

A conscious agent is always present or implied above the top layer of every computer message or program. All codes, programs, and algorithms whose origin we know come from conscious beings (15).

Passing the Turing test will continue to be a fruitless endeavor until we learn how to endow computers with consciousness. Similarly, strong AI will never emerge from conventional computers. Conscious activity reverses information entropy by creating new information; nothing else is known to do this (15).

The best argument for the three-tiered model of causation is simply that it fits all available data.
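The loop in the autosuggest experiment can be reproduced with a toy model. The sketch below assumes a first-order word-pair (bigram) table built from a made-up corpus; a real phone keyboard is certainly more elaborate, and the corpus, function names, and starting word are all hypothetical. Always taking the top suggestion makes the output depend only on the previous word, so the first repeated word locks the text into a cycle.

```python
from collections import Counter, defaultdict

# Made-up sample of "prior messages" (an assumption for illustration).
CORPUS = (
    "i can tell you about it and then "
    "i can tell you about it and then "
    "i can ask him about it"
)


def build_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it and how often."""
    words = text.split()
    table = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def suggest(table: dict, word: str, k: int = 3) -> list:
    """Return up to k most frequent next words after `word`."""
    return [w for w, _ in table.get(word, Counter()).most_common(k)]


def always_first(table: dict, start: str, length: int) -> list:
    """Generate text by always accepting the top suggestion."""
    out = [start]
    while len(out) < length:
        options = suggest(table, out[-1], k=1)
        if not options:          # no known successor: stop early
            break
        out.append(options[0])
    return out


def cycle_length(words: list):
    """Distance between the first repeated word and its earlier occurrence."""
    seen = {}
    for i, w in enumerate(words):
        if w in seen:
            return i - seen[w]
        seen[w] = i
    return None


if __name__ == "__main__":
    table = build_bigrams(CORPUS)
    words = always_first(table, "i", 16)
    print(" ".join(words))
    print("cycle length:", cycle_length(words))   # cycle length: 8
```

A person choosing among the same suggestions can break the cycle at any step, for example by picking “ask” instead of “tell” after “can.”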
I believe the following is the correct hierarchy of cause and effect:

This sequence conforms to all documented experience.

Futurists like Ray Kurzweil tell us that Moore’s law guarantees computers will double in speed every two years. Thus it’s only a matter of time before a “Singularity,” when computers become smarter than humans. Eventually we will upload ourselves into cyberspace and achieve immortality in the “cloud.”

Until man-made machines can transcend the limitations of algorithms, self-evolve, and pass the Turing Test, we have no good reason to endorse the Singularity theory (14). The Singularity is a mythical narrative because, though embraced by intellectual elites, it is based on a backwards understanding of causation. Consciousness creates codes, but codes have never been shown to create consciousness.

This brings us to Aristotle’s question of final causes. Human beings ostensibly have purposes and goals. We are teleological. The purpose of our drive in the morning is to take us to work. In a lesser way, computers and codes are also teleological. In ASCII the purpose of 1000001 is to represent the letter “A.” In your email program, the purpose of the “send” button is to transmit your message to another computer. Computers and codes have pre-programmed goals; as conscious beings we choose our goals.

This does not address the question of ultimate purposes. I do not attempt to answer this question here. Until we understand what consciousness is, we may not be able to answer it at all.

What we can say is that purpose is not illusory; that humans and programs appear purposeful because they are purposeful; and that consciousness is not known to be an intrinsic property of matter. Rather, consciousness controls and manipulates matter by means we presently do not understand.

References

*Image source: Wikipedia https://lt.wikipedia.org/wiki/Vaizdas:OSIModel.jpg

LAWS, LANGUAGE and LIFE: Howard Pattee’s classic papers on the physics of symbols with contemporary commentary. Authors: Pattee, Howard Hunt; Rączaszek-Leonardi, Joanna

Perry Marshall is author of Evolution 2.0: Breaking the Deadlock Between Darwin and Design. He is founder of the $5 million Evolution 2.0 Prize, staffed by judges from Harvard, Oxford and MIT, at www.evo2.org.

Perry is a software engineer and entrepreneur. His reinvention of the Pareto Principle is published in Harvard Business Review, and his 80/20 Curve is used as a productivity tool at NASA’s Jet Propulsion Laboratory at the California Institute of Technology. He is endorsed by FORBES and INC Magazine.